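import csv
import pickle

# CSV column names, kept in Korean because they must match the file header:
#   "신장(5Cm단위)"  = height in cm, rounded to 5 cm
#   "체중(5Kg 단위)" = weight in kg, rounded to 5 kg
#   "허리둘레"       = waist circumference in cm
HEIGHT, WEIGHT, WAIST = "신장(5Cm단위)", "체중(5Kg 단위)", "허리둘레"

# Depending on how the file was exported, open() may need an explicit encoding (e.g. encoding="cp949")
with open("./NHIS_OPEN_GJ_2015.csv", newline="") as csvfile:
    reader = csv.reader(csvfile)
    header = next(reader, None)

    table = list()
    for row in reader:
        row = {header[i]: row[i] for i in range(len(header))}

        # Skip records with a missing height, weight, or waist measurement
        if row[HEIGHT] == "" or row[WEIGHT] == "" or row[WAIST] == "":
            continue

        height = int(row[HEIGHT])
        weight = int(row[WEIGHT])

        # BMI = weight (kg) / height (m)^2; height is in cm, hence the factor 10000
        bmi = round(10000 * weight / height ** 2, 2)

        # Obesity class: 0 = BMI <= 30, 1 = (30, 35], 2 = (35, 40], 3 = above 40
        label = 0
        if bmi > 40:
            label = 3
        elif bmi > 35:
            label = 2
        elif bmi > 30:
            label = 1

        nrow = {
            "height": height,
            "weight": weight,
            "waist": int(row[WAIST]),
            "overweight": label,
        }
        table.append(nrow)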
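The labeling rule inside the loop can be checked on its own. The sketch below pulls it into two hypothetical helpers, `bmi` and `obesity_class` (names not from the original), assuming height in cm and weight in kg as in the NHIS columns. The cut-offs 30, 35 and 40 correspond to the WHO obesity classes I to III, except that a BMI of exactly 30, 35 or 40 lands in the lower class because the comparisons are strict.

```python
def bmi(weight_kg: float, height_cm: float) -> float:
    """Body mass index: weight (kg) divided by height (m) squared."""
    height_m = height_cm / 100
    return weight_kg / height_m ** 2


def obesity_class(bmi_value: float) -> int:
    """0 for BMI <= 30, then 1, 2, 3 for (30, 35], (35, 40], and above 40."""
    if bmi_value > 40:
        return 3
    if bmi_value > 35:
        return 2
    if bmi_value > 30:
        return 1
    return 0


# 70 kg at 170 cm: normal range, class 0
print(round(bmi(70, 170), 2), obesity_class(bmi(70, 170)))    # 24.22 0
# 120 kg at 170 cm: above 40, class 3
print(round(bmi(120, 170), 2), obesity_class(bmi(120, 170)))  # 41.52 3
```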

# Group the rows by obesity class and print the size of each group
dic = []
for i in range(4):
    v = [it for it in table if it["overweight"] == i]
    print(len(v))
    dic.append(v)

with open("./preprocessing2015.pkl", "wb") as fw:
    pickle.dump(table, fw, protocol=pickle.HIGHEST_PROTOCOL)
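The per-class loop builds four lists only to print their lengths, and the table is then pickled. Both steps are sketched below on three made-up rows (not NHIS data): `collections.Counter` counts every class in a single pass, and a round trip through a temporary pickle file checks that the rows come back unchanged. The file name is hypothetical.

```python
import pickle
import tempfile
from collections import Counter
from pathlib import Path

# Made-up rows in the same shape as the dictionaries built above
table = [
    {"height": 170, "weight": 70, "waist": 85, "overweight": 0},
    {"height": 160, "weight": 80, "waist": 95, "overweight": 1},
    {"height": 165, "weight": 70, "waist": 88, "overweight": 0},
]

# One pass over the rows; a class with no rows reports 0
counts = Counter(row["overweight"] for row in table)
print([counts[i] for i in range(4)])  # [2, 1, 0, 0]

# Write the rows to a pickle file and read them back
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "preprocessing_example.pkl"
    with open(path, "wb") as fw:
        pickle.dump(table, fw, protocol=pickle.HIGHEST_PROTOCOL)
    with open(path, "rb") as fr:
        restored = pickle.load(fr)

print(restored == table)  # True
```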

import pickle

from matplotlib import pyplot as plt
from sklearn.svm import SVC

# Polynomial-kernel SVM; probability=True enables predict_proba at extra training cost
clf = SVC(kernel='poly', probability=True)

# Train on the 2015 rows, test on the 2016 rows
with open('preprocessing2015.pkl', "rb") as f:
    x = pickle.load(f)
with open('preprocessing2016.pkl', "rb") as f:
    y = pickle.load(f)

# Keep every 1000th row; SVM training time grows quickly with the number of samples
x = x[::1000]
y = y[::1000]

data_test = [[row["waist"], row["height"]] for row in y]

labels_test = [row["overweight"] for row in y]

data_train = [[row["waist"], row["height"]] for row in x]

labels_train = [row["overweight"] for row in x]

## 3-1) training

clf.fit(data_train, labels_train)

## 3-2) prediction

prediction_test= clf.predict(data_test)

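# One probability column per class, in the order of clf.classes_
probability_test = clf.predict_proba(data_test)

## 4. Evaluate the model

from sklearn.metrics import confusion_matrix, roc_auc_score

## 4-1) AUC

# One-vs-rest AUC for the multi-class problem; only valid if labels_test contains exactly the classes in clf.classes_
#auc = roc_auc_score(labels_test, probability_test, multi_class="ovr")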
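With four classes, a single AUC needs a one-vs-rest decomposition: each class in turn is the positive class, scored by its own probability column. A minimal numpy sketch of that idea, with hypothetical helpers `binary_auc` and `ovr_auc` and made-up labels and probabilities. It computes AUC as the fraction of positive/negative pairs ranked correctly (ties count half), which needs memory proportional to positives times negatives, so it only suits small test sets such as the subsampled ones here. The unweighted mean of the per-class values is what the default macro average of `roc_auc_score(..., multi_class="ovr")` reports.

```python
import numpy as np


def binary_auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties count half)."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    if len(pos) == 0 or len(neg) == 0:
        return float("nan")  # undefined when one side is empty
    diff = pos[:, None] - neg[None, :]  # every positive/negative pair
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())


def ovr_auc(labels, proba, classes):
    """One-vs-rest AUC per class; proba has one column per entry of classes."""
    labels = np.asarray(labels)
    proba = np.asarray(proba, dtype=float)
    return {c: binary_auc(labels == c, proba[:, k]) for k, c in enumerate(classes)}


labels = [0, 0, 1, 2]
proba = [[0.7, 0.2, 0.1],
         [0.5, 0.3, 0.2],
         [0.2, 0.6, 0.2],
         [0.6, 0.1, 0.3]]
result = ovr_auc(labels, proba, classes=[0, 1, 2])
print(result)  # {0: 0.75, 1: 1.0, 2: 1.0}
```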

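## 4-2) Confusion matrix

from sklearn.metrics import classification_report

# With 4 classes the matrix is 4x4, so it cannot be unpacked into tn, fp, fn, tp;
# rows are true classes and columns are predicted classes
cm = confusion_matrix(labels_test, prediction_test, labels=[0, 1, 2, 3])
print(cm)

accuracy = cm.trace() / cm.sum()
print("accuracy:", accuracy)

# Precision, recall (= sensitivity), and F1 score for each class, one-vs-rest
print(classification_report(labels_test, prediction_test, labels=[0, 1, 2, 3]))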
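Sensitivity and specificity have no single value for a 4-class problem, but each class can be scored one-vs-rest from the square matrix returned by `confusion_matrix(..., labels=[0, 1, 2, 3])`: for class k, tp is the diagonal entry, fn the rest of row k, fp the rest of column k, and tn everything else. A minimal numpy sketch with a hypothetical helper `one_vs_rest_rates` and a made-up 3-class matrix; the sensitivity of a class with no true rows comes out as `nan` instead of raising.

```python
import numpy as np


def one_vs_rest_rates(cm):
    """Per-class sensitivity and specificity from a square confusion matrix
    (rows = true class, columns = predicted class)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp  # true class k, predicted as another class
    fp = cm.sum(axis=0) - tp  # predicted as k, true class is another one
    tn = total - tp - fn - fp
    with np.errstate(divide="ignore", invalid="ignore"):
        sensitivity = tp / (tp + fn)
        specificity = tn / (tn + fp)
    return sensitivity, specificity


# Made-up matrix for 3 classes
cm = [[5, 1, 0],
      [2, 3, 1],
      [0, 0, 4]]
sens, spec = one_vs_rest_rates(cm)
print(sens)  # approximately [0.833, 0.5, 1.0]
print(spec)  # approximately [0.8, 0.9, 0.917]
```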

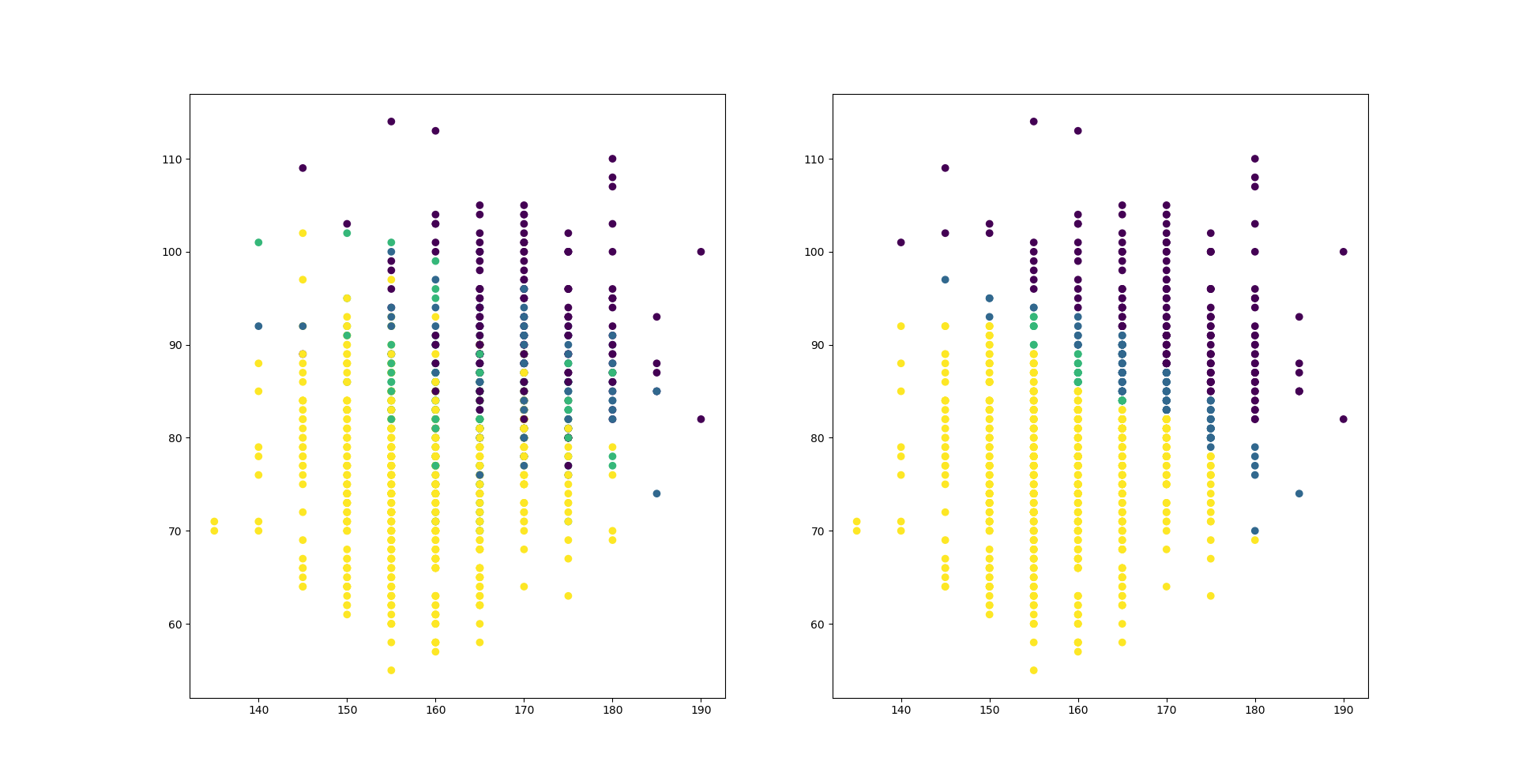

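# figsize is (width, height) in inches
fig, axs = plt.subplots(1, 2, figsize=(12, 6))

# Height on the x-axis, waist on the y-axis; color = true class (left) vs. SVC prediction (right)
axs[0].scatter([row[1] for row in data_test], [row[0] for row in data_test], c=labels_test)
axs[0].set_title("true class")

axs[1].scatter([row[1] for row in data_test], [row[0] for row in data_test], c=prediction_test)
axs[1].set_title("predicted class")

plt.show()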

import pickle

from matplotlib import pyplot as plt

from sklearn.neural_network import MLPClassifier

clf = MLPClassifier(hidden_layer_sizes=(1024, 256, 32),
                    activation='logistic',  # sigmoid
                    solver='adam',          # Adam optimizer (stochastic gradient-based)
                    learning_rate_init=0.01)

# Train on the 2016 rows, test on the 2015 rows, keeping every 100th row of each
with open('preprocessing2016.pkl', "rb") as f:
    x = pickle.load(f)
with open('preprocessing2015.pkl', "rb") as f:
    y = pickle.load(f)

x = x[::100]
y = y[::100]

data_test = [[row["weight"], row["height"]] for row in y]

labels_test = [row["overweight"] for row in y]

data_train = [[row["weight"], row["height"]] for row in x]

labels_train = [row["overweight"] for row in x]

## 3-1) training

clf.fit(data_train, labels_train)

## 3-2) prediction

prediction_test= clf.predict(data_test)

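# One probability column per class, in the order of clf.classes_
probability_test = clf.predict_proba(data_test)

## 4. Evaluate the model

from sklearn.metrics import confusion_matrix, roc_auc_score

## 4-1) AUC

# One-vs-rest AUC for the multi-class problem; only valid if labels_test contains exactly the classes in clf.classes_
#auc = roc_auc_score(labels_test, probability_test, multi_class="ovr")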

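## 4-2) Confusion matrix

from sklearn.metrics import classification_report

# tn, fp, fn, tp only exist for a binary problem; with 4 classes the matrix is 4x4
# (rows are true classes, columns are predicted classes)
cm = confusion_matrix(labels_test, prediction_test, labels=[0, 1, 2, 3])
print(cm)

accuracy = cm.trace() / cm.sum()
print("accuracy:", accuracy)

# Precision, recall (= sensitivity), and F1 score for each class, one-vs-rest
print(classification_report(labels_test, prediction_test, labels=[0, 1, 2, 3]))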

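# figsize is (width, height) in inches
fig, axs = plt.subplots(1, 2, figsize=(12, 6))

# Height on the x-axis, weight on the y-axis; color = true class (left) vs. MLP prediction (right)
axs[0].scatter([row[1] for row in data_test], [row[0] for row in data_test], c=labels_test)
axs[0].set_title("true class")

axs[1].scatter([row[1] for row in data_test], [row[0] for row in data_test], c=prediction_test)
axs[1].set_title("predicted class")

plt.show()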

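# 0. Import the packages to use

import pickle

from matplotlib import pyplot as plt

# keras.utils.np_utils is gone from recent Keras releases, while keras.utils.to_categorical
# exists in old and new ones; importing keras.utils under the old name keeps np_utils.to_categorical working
from keras import utils as np_utils
from keras.datasets import mnist
from keras.models import Sequential
from keras.layers import Dense, Activation

import numpy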

# figsize is (width, height) in inches
fig, axs = plt.subplots(1, 2, figsize=(12, 6))

# 1. Create the dataset

#(x_train, y_train), (x_test, y_test) = mnist.load_data()

#x_train = x_train.reshape(60000, 784).astype('float32') / 255.0

#x_test = x_test.reshape(10000, 784).astype('float32') / 255.0

#y_train = np_utils.to_categorical(y_train)

#y_test = np_utils.to_categorical(y_test)

#print(x_train[0])

# Train on every 2016 row, test on every 10th 2015 row
with open('preprocessing2016.pkl', "rb") as f:
    x = pickle.load(f)
with open('preprocessing2015.pkl', "rb") as f:
    y = pickle.load(f)

y = y[::10]

#waist = [ row["waist"] for row in x]

#waist_min = numpy.min(waist)

#waist_max = numpy.max(waist)

#waist = [(item - waist_min)/(waist_max - waist_min) for item in waist]

#height = [ row["height"] for row in x]

#height_min = numpy.min(height)

#height_max = numpy.max(height)

#height = [(item - height_min)/(height_max - height_min) for item in height]
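The commented-out lines above compute min-max scaling for waist and height from the training rows `x`, but never apply it to the model input or to the test rows `y`. If scaling is switched on, the test data should be transformed with the training minimum and range rather than its own. A sketch under that assumption, with hypothetical helpers `fit_min_max` and `apply_min_max` and made-up `[waist, height]` rows; unscaled inputs in the 70 to 190 range can push the sigmoid hidden units toward saturation, which is one reason to scale.

```python
import numpy as np


def fit_min_max(train):
    """Column-wise minimum and range of the training features."""
    train = np.asarray(train, dtype=float)
    col_min = train.min(axis=0)
    col_range = train.max(axis=0) - col_min
    col_range[col_range == 0] = 1.0  # constant column: avoid dividing by zero
    return col_min, col_range


def apply_min_max(data, col_min, col_range):
    """Scale with the training statistics; test values may fall outside [0, 1]."""
    return (np.asarray(data, dtype=float) - col_min) / col_range


# Made-up [waist, height] rows
train = [[70, 150], [90, 170], [110, 190]]
test = [[80, 160], [120, 200]]

col_min, col_range = fit_min_max(train)
scaled_train = apply_min_max(train, col_min, col_range)
scaled_test = apply_min_max(test, col_min, col_range)
print(scaled_train)
print(scaled_test)
```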

test_data= numpy.array([[it["waist"], it["height"]] for it in y])

test_labels =[row["overweight"] for row in y]

train_data = numpy.array( [ [it["waist"], it["height"]] for it in x])

train_labels =np_utils.to_categorical([row["overweight"] for row in x],4)

#print("max: ",numpy.max( [row["weight"] for row in y]),"min: ",numpy.min( [row["weight"] for row in y]))
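`np_utils.to_categorical(labels, 4)` converts integer class labels into one-hot rows, the target format that `categorical_crossentropy` expects. An equivalent numpy sketch, assuming the labels are integers in 0..3; `one_hot` is a hypothetical name, not a Keras function.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Row i is all zeros except a 1 in column labels[i]."""
    return np.eye(num_classes, dtype="float32")[np.asarray(labels)]


encoded = one_hot([0, 3, 1], 4)
print(encoded)
```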

# 2. Build the model

model = Sequential()

model.add(Dense(1024, input_dim=2, activation="sigmoid"))

model.add(Dense(512, activation="sigmoid"))

model.add(Dense(128, activation="sigmoid"))

model.add(Dense(units=4, activation='softmax'))
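For a sense of model size, a fully connected network has, per layer, inputs times outputs weights plus one bias per output. A small sketch with a hypothetical helper `dense_param_count`, applied to the Keras model above (2 inputs, then 1024, 512, 128 and 4 units) and to the earlier `MLPClassifier` configuration (2 inputs, hidden sizes 1024, 256, 32, and 4 outputs, assuming all four classes appear in its training labels). `model.summary()` should report the same total for the Keras model.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases of a stack of fully connected layers.

    layer_sizes lists the widths in order: inputs, hidden layers..., outputs.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


keras_params = dense_param_count([2, 1024, 512, 128, 4])
mlp_params = dense_param_count([2, 1024, 256, 32, 4])
print(keras_params)  # 594052
print(mlp_params)    # 273828
```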

# 3. Configure the training process

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
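`categorical_crossentropy` compares each one-hot target row with the softmax output row: the per-row loss is minus the log of the probability assigned to the true class, averaged over the batch. A simplified numpy sketch for intuition only; the clipping epsilon of 1e-7 is an assumption here, and Keras' own implementation handles details this sketch ignores.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over rows of -sum_k y_true[k] * log(y_pred[k]); predictions are clipped to avoid log(0)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


# Row 1 gives the true class probability 0.5, row 2 gives it 0.25
y_true = [[1, 0, 0, 0], [0, 0, 1, 0]]
y_pred = [[0.5, 0.25, 0.125, 0.125], [0.25, 0.25, 0.25, 0.25]]
loss = categorical_crossentropy(y_true, y_pred)
print(loss)  # (ln 2 + ln 4) / 2, about 1.0397
```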

# 4. Train the model

hist = model.fit(train_data, train_labels, epochs=20, batch_size=1024 * 10)

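# 5. Inspect the training history

print('## training loss and acc ##')

print(hist.history['loss'])

# Older Keras versions store accuracy under 'acc', newer ones under 'accuracy'
print(hist.history.get('accuracy', hist.history.get('acc')))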

# 6. Evaluate the model

loss_and_metrics = model.evaluate(test_data, np_utils.to_categorical(test_labels,4), batch_size=1024 * 10)

print('## evaluation loss and_metrics ##')

print(loss_and_metrics)

# 7. Use the model

#xhat = x_test[0:1]

yhat = model.predict(test_data)

model = None

#print('## yhat ##')

#print(yhat)

# Predicted class = index of the largest softmax output in each row
predict_set = [numpy.argmax(item) for item in yhat]

# Rows per class in the test set: true labels first, then predictions
for i in range(4):
    print(sum([1 for row in y if row["overweight"] == i]))

for i in range(4):
    print(sum([1 for it in predict_set if it == i]))

axs[0].scatter([row[1] for row in test_data], [row[0] for row in test_data],s = None,c = test_labels)

axs[1].scatter([row[1] for row in test_data], [row[0] for row in test_data],s = None,c = predict_set)

plt.show()
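The last cell turns each softmax row into a class with `numpy.argmax` and then counts classes with Python loops. The same bookkeeping can be vectorized with `argmax(axis=1)` and `numpy.bincount(..., minlength=4)`; comparing true and predicted counts shows at a glance whether the network collapses onto the majority class. The softmax values below are made up.

```python
import numpy as np

# Made-up softmax outputs for 5 test rows and 4 classes
yhat = np.array([
    [0.90, 0.05, 0.03, 0.02],
    [0.60, 0.30, 0.05, 0.05],
    [0.20, 0.70, 0.05, 0.05],
    [0.10, 0.20, 0.60, 0.10],
    [0.85, 0.10, 0.03, 0.02],
])
true_labels = np.array([0, 1, 1, 2, 0])

predicted = yhat.argmax(axis=1)                      # most probable class per row
true_counts = np.bincount(true_labels, minlength=4)  # rows per true class
pred_counts = np.bincount(predicted, minlength=4)    # rows per predicted class
print(predicted)    # [0 0 1 2 0]
print(true_counts)  # [2 2 1 0]
print(pred_counts)  # [3 1 1 0]
```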